3Blue1Brown | How large language models work, a visual intro to transformers | Chapter 5, Deep Learning @3blue1brown | Uploaded 6 months ago | Updated 2 hours ago

Breaking down how Large Language Models work

Instead of sponsored ad reads, these lessons are funded directly by viewers: 3b1b.co/support

---

Here are a few other relevant resources

Build a GPT from scratch, by Andrej Karpathy

youtu.be/kCc8FmEb1nY

If you want a conceptual understanding of language models from the ground up, @vcubingx just started a short series of videos on the topic:

youtu.be/1il-s4mgNdI?si=XaVxj6bsdy3VkgEX

If you're interested in the herculean task of interpreting what these large networks might actually be doing, the Transformer Circuits posts by Anthropic are great. In particular, it was only after reading one of these that I started thinking of the combination of the value and output matrices as being a combined low-rank map from the embedding space to itself, which, at least in my mind, made things much clearer than other sources.

https://transformer-circuits.pub/2021/framework/index.html
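
To make the "combined low-rank map" idea above concrete, here is a minimal NumPy sketch. The sizes `d_model` and `d_head` and the random matrices `W_V` and `W_O` are illustrative assumptions, not values from the video or from any real model. The point is only that composing a map down into a small head space with a map back up gives a single square matrix on the embedding space whose rank is capped by the head dimension.

```python
import numpy as np

rng = np.random.default_rng(0)

d_model = 16  # embedding dimension (assumed toy size; real models are far larger)
d_head = 4    # per-head dimension (assumed), much smaller than d_model

# Value matrix: maps an embedding down into the small head space.
W_V = rng.standard_normal((d_head, d_model))
# Output matrix: maps from the head space back up into the embedding space.
W_O = rng.standard_normal((d_model, d_head))

# Composing the two gives one d_model x d_model map from the embedding space to itself.
W_OV = W_O @ W_V

# Applying the two matrices in sequence matches applying the combined map once.
x = rng.standard_normal(d_model)
two_step = W_O @ (W_V @ x)
one_step = W_OV @ x
print(np.allclose(two_step, one_step))

# The combined map is square, but its rank cannot exceed d_head.
rank = np.linalg.matrix_rank(W_OV)
print(W_OV.shape, rank)
```

Although `W_OV` has `d_model * d_model` entries, it is fully determined by the `2 * d_model * d_head` entries of its two factors, which is what "low-rank" means here.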

Site with exercises related to ML programming and GPTs

gptandchill.ai/codingproblems

History of language models by Brit Cruise, @ArtOfTheProblem

youtu.be/OFS90-FX6pg

An early paper on how directions in embedding spaces have meaning:

arxiv.org/pdf/1301.3781.pdf

---

Timestamps

0:00 - Predict, sample, repeat

3:03 - Inside a transformer

6:36 - Chapter layout

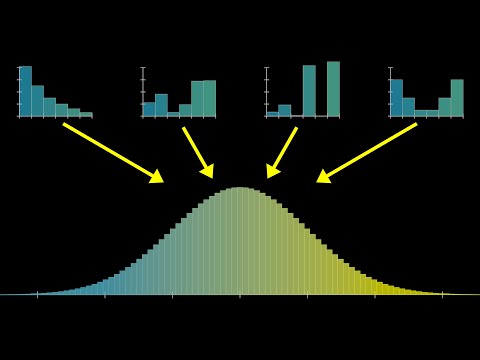

7:20 - The premise of Deep Learning

12:27 - Word embeddings

18:25 - Embeddings beyond words

20:22 - Unembedding

22:22 - Softmax with temperature

26:03 - Up next
