Andrej Karpathy | Building makemore Part 2: MLP @AndrejKarpathy | Uploaded 2 years ago

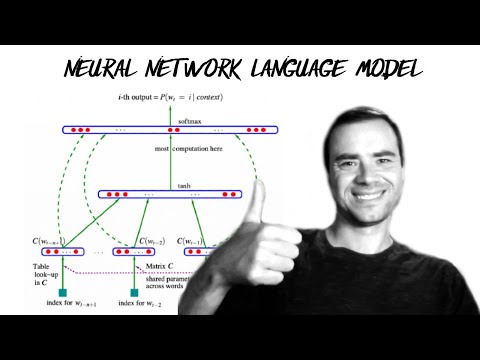

We implement a multilayer perceptron (MLP) character-level language model. In this video we also introduce many basics of machine learning (e.g. model training, learning rate tuning, hyperparameters, evaluation, train/dev/test splits, under/overfitting, etc.).
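The sketch below shows the kind of dataset an MLP character-level model trains on. It is a minimal pure-Python version with no PyTorch. It turns names into (context, next character) pairs using a fixed context window, and it splits the words 80/10/10 into train/dev/test. Several details are assumptions for illustration and are not stated in this description: the `block_size = 3` window, the `.` boundary token at id 0, the split ratios, the seed, and the sample names.

```python
import random

def build_vocab(words):
    """Map characters to integer ids, reserving id 0 for the '.' boundary token."""
    chars = sorted(set("".join(words)))
    stoi = {ch: i + 1 for i, ch in enumerate(chars)}
    stoi["."] = 0
    return stoi

def build_dataset(words, stoi, block_size=3):
    """Return (X, Y): each row of X holds block_size previous char ids, Y the next id."""
    X, Y = [], []
    for w in words:
        context = [0] * block_size  # pad the start of every word with '.'
        for ch in w + ".":  # the closing '.' is a prediction target too
            ix = stoi[ch]
            X.append(context)
            Y.append(ix)
            context = context[1:] + [ix]  # slide the window one character (new list)
    return X, Y

def split_words(words, seed=42):
    """Shuffle a copy of the words and split it 80/10/10 into train/dev/test."""
    words = list(words)
    random.Random(seed).shuffle(words)
    n1, n2 = int(0.8 * len(words)), int(0.9 * len(words))
    return words[:n1], words[n1:n2], words[n2:]

# Hypothetical sample names, just to show the (context -> target) pairs.
sample = ["emma", "olivia", "ava"]
stoi = build_vocab(sample)
itos = {i: ch for ch, i in stoi.items()}
X, Y = build_dataset(sample, stoi)
for ctx, y in zip(X[:5], Y[:5]):
    print("".join(itos[i] for i in ctx), "->", itos[y])
```

For "emma" this prints `... -> e`, `..e -> m`, `.em -> m`, `emm -> a`, `mma -> .`. Each word contributes `len(word) + 1` examples. Splitting at the word level keeps every example from one name inside the same split.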

Links:

- makemore on GitHub: github.com/karpathy/makemore

- Jupyter notebook I built in this video: github.com/karpathy/nn-zero-to-hero/blob/master/lectures/makemore/makemore_part2_mlp.ipynb

- Colab notebook (new)!!!: colab.research.google.com/drive/1YIfmkftLrz6MPTOO9Vwqrop2Q5llHIGK?usp=sharing

- Bengio et al. 2003 MLP language model paper (pdf): jmlr.org/papers/volume3/bengio03a/bengio03a.pdf

- my website: karpathy.ai

- my Twitter: twitter.com/karpathy

- (new) Neural Networks: Zero to Hero series Discord channel: discord.gg/3zy8kqD9Cp, for people who'd like to chat more and go beyond YouTube comments

Useful links:

- PyTorch internals reference: http://blog.ezyang.com/2019/05/pytorch-internals

Exercises:

- E01: Tune the hyperparameters of the training to beat my best validation loss of 2.2

- E02: I was not careful with the initialization of the network in this video. (1) What is the loss you'd get if the predicted probabilities at initialization were perfectly uniform? What loss do we achieve? (2) Can you tune the initialization to get a starting loss that is much closer to (1)?

- E03: Read the Bengio et al. 2003 paper (link above), implement and try any idea from the paper. Did it work?
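For E02 part (1) the arithmetic is short. If every one of V possible next characters gets probability 1/V, the per-example negative log likelihood is -ln(1/V) = ln V. The sketch below assumes V = 27, meaning 26 lowercase letters plus a `.` boundary token. That vocabulary size is an assumption about makemore and is not stated in this description.

```python
import math

def uniform_nll(vocab_size):
    """Average negative log likelihood if every character gets probability 1/vocab_size."""
    return -math.log(1.0 / vocab_size)

# Assumption: 27 characters (a-z plus the '.' boundary token).
print(f"{uniform_nll(27):.4f}")  # 3.2958
```

Softmax turns equal logits into a uniform distribution. For part (2), one thing worth trying is to keep the initial output logits close to zero, for example by scaling down the output layer's initial weights and biases. Treat this as a suggestion to experiment with, not a verified result.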

Chapters:

00:00:00 intro

00:01:48 Bengio et al. 2003 (MLP language model) paper walkthrough

00:09:03 (re-)building our training dataset

00:12:19 implementing the embedding lookup table

00:18:35 implementing the hidden layer + internals of torch.Tensor: storage, views

00:29:15 implementing the output layer

00:29:53 implementing the negative log likelihood loss

00:32:17 summary of the full network

00:32:49 introducing F.cross_entropy and why

00:37:56 implementing the training loop, overfitting one batch

00:41:25 training on the full dataset, minibatches

00:45:40 finding a good initial learning rate

00:53:20 splitting up the dataset into train/val/test splits and why

01:00:49 experiment: larger hidden layer

01:05:27 visualizing the character embeddings

01:07:16 experiment: larger embedding size

01:11:46 summary of our final code, conclusion

01:13:24 sampling from the model

01:14:55 Google Colab (new!!) notebook advertisement
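This relates to the "introducing F.cross_entropy and why" chapter. One reason to prefer a fused cross-entropy over computing softmax and then the log by hand is numerical stability. The pure-Python sketch below uses no PyTorch, and its function names are hypothetical. It compares a literal softmax-then-NLL with the log-sum-exp form, which subtracts the largest logit first. Shifting every logit by the same constant leaves the softmax unchanged, so both forms give the same loss whenever the naive one does not overflow.

```python
import math

def naive_cross_entropy(logits, target):
    """Literal softmax followed by negative log likelihood; exp() can overflow."""
    counts = [math.exp(z) for z in logits]
    total = sum(counts)
    probs = [c / total for c in counts]
    return -math.log(probs[target])

def stable_cross_entropy(logits, target):
    """Same loss via log-sum-exp after subtracting the max logit.

    Shifting every logit by a constant leaves the softmax unchanged, and after
    the shift every exp() argument is <= 0, so it cannot overflow.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

small = [-2.0, -3.0, 0.0, 5.0]
print(naive_cross_entropy(small, 3), stable_cross_entropy(small, 3))  # agree up to rounding

big = [-100.0, -3.0, 0.0, 1000.0]
try:
    naive_cross_entropy(big, 3)
except OverflowError:
    print("naive version: OverflowError from math.exp(1000.0)")
print(stable_cross_entropy(big, 3))  # 0.0: all probability mass on the target
```

PyTorch's `F.cross_entropy` takes raw logits and integer class targets and combines `log_softmax` with `nll_loss`, so you get a stable formulation without writing it by hand.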

![Let's build the GPT Tokenizer

The Tokenizer is a necessary and pervasive component of Large Language Models (LLMs), translating between strings and tokens (text chunks). Tokenizers are a completely separate stage of the LLM pipeline: they have their own training sets and training algorithms (Byte Pair Encoding), and after training they implement two fundamental functions: encode() from strings to tokens, and decode() back from tokens to strings. In this lecture we build from scratch the Tokenizer used in the GPT series from OpenAI. In the process, we will see that a lot of weird behaviors and problems of LLMs actually trace back to tokenization. We'll go through a number of these issues, discuss why tokenization is at fault, and why someone out there ideally finds a way to delete this stage entirely.

Chapters:

00:00:00 intro: Tokenization, GPT-2 paper, tokenization-related issues

00:05:50 tokenization by example in a Web UI (tiktokenizer)

00:14:56 strings in Python, Unicode code points

00:18:15 Unicode byte encodings, ASCII, UTF-8, UTF-16, UTF-32

00:22:47 daydreaming: deleting tokenization

00:23:50 Byte Pair Encoding (BPE) algorithm walkthrough

00:27:02 starting the implementation

00:28:35 counting consecutive pairs, finding most common pair

00:30:36 merging the most common pair

00:34:58 training the tokenizer: adding the while loop, compression ratio

00:39:20 tokenizer/LLM diagram: it is a completely separate stage

00:42:47 decoding tokens to strings

00:48:21 encoding strings to tokens

00:57:36 regex patterns to force splits across categories

01:11:38 tiktoken library intro, differences between GPT-2/GPT-4 regex

01:14:59 GPT-2 encoder.py released by OpenAI walkthrough

01:18:26 special tokens, tiktoken handling of, GPT-2/GPT-4 differences

01:25:28 minbpe exercise time! write your own GPT-4 tokenizer

01:28:42 sentencepiece library intro, used to train Llama 2 vocabulary

01:43:27 how to set vocabulary size? revisiting gpt.py transformer

01:48:11 training new tokens, example of prompt compression

01:49:58 multimodal [image, video, audio] tokenization with vector quantization

01:51:41 revisiting and explaining the quirks of LLM tokenization

02:10:20 final recommendations

02:12:50 ??? :)

Exercises:

- Advised flow: reference this document and try to implement the steps before I give away the partial solutions in the video. The full solutions, if you're getting stuck, are in the minbpe code https://github.com/karpathy/minbpe/blob/master/exercise.md

Links:

- Google colab for the video: https://colab.research.google.com/drive/1y0KnCFZvGVf_odSfcNAws6kcDD7HsI0L?usp=sharing

- GitHub repo for the video: minBPE https://github.com/karpathy/minbpe

- Playlist of the whole Zero to Hero series so far: https://www.youtube.com/watch?v=VMj-3S1tku0&list=PLAqhIrjkxbuWI23v9cThsA9GvCAUhRvKZ

- our Discord channel: https://discord.gg/3zy8kqD9Cp

- my Twitter: https://twitter.com/karpathy

Supplementary links:

- tiktokenizer https://tiktokenizer.vercel.app

- tiktoken from OpenAI: https://github.com/openai/tiktoken

- sentencepiece from Google https://github.com/google/sentencepiece](https://i.ytimg.com/vi/zduSFxRajkE/hqdefault.jpg)
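The tokenizer chapters walk through byte-level BPE. You count consecutive pairs, merge the most common one, and repeat that to train. After training you can decode tokens back to strings and encode strings to tokens. Below is a minimal pure-Python sketch of those steps on UTF-8 bytes. It was written for illustration and is not the minbpe code. It leaves out the regex pre-splitting and special tokens covered later in the video, and its function names are hypothetical.

```python
def get_stats(ids):
    """Count occurrences of each consecutive pair of token ids."""
    counts = {}
    for pair in zip(ids, ids[1:]):
        counts[pair] = counts.get(pair, 0) + 1
    return counts

def merge(ids, pair, idx):
    """Replace every non-overlapping occurrence of `pair` (left to right) with `idx`."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and ids[i] == pair[0] and ids[i + 1] == pair[1]:
            out.append(idx)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train(text, vocab_size):
    """Learn up to vocab_size - 256 merges on top of the 256 raw byte values."""
    assert vocab_size >= 256
    ids = list(text.encode("utf-8"))
    merges = {}  # (id, id) -> new id, in the order they were learned
    for idx in range(256, vocab_size):
        stats = get_stats(ids)
        if not stats:
            break  # sequence too short to merge further
        pair = max(stats, key=stats.get)  # most common consecutive pair
        ids = merge(ids, pair, idx)
        merges[pair] = idx
    return merges

def build_vocab(merges):
    """Map each token id to the bytes it stands for."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), idx in merges.items():  # dicts preserve insertion order
        vocab[idx] = vocab[a] + vocab[b]
    return vocab

def encode(text, merges):
    """Apply learned merges, earliest-learned first, until none applies."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        stats = get_stats(ids)
        pair = min(stats, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break  # no learned merge applies anymore
        ids = merge(ids, pair, merges[pair])
    return ids

def decode(ids, vocab):
    """Concatenate the bytes for each token and decode them as UTF-8."""
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

text = "aaabdaaabac"
merges = train(text, vocab_size=259)  # 3 merges on top of the 256 bytes
vocab = build_vocab(merges)
ids = encode(text, merges)
print(ids, decode(ids, vocab) == text)  # [258, 100, 258, 97, 99] True
```

`encode` applies merges in the order they were learned, lowest new id first. That is why it picks the pair with the smallest merge index rather than the most frequent pair. Bytes that no merge covers stay as raw byte tokens, so encoding and then decoding any Python string gives the original text back.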

![[1hr Talk] Intro to Large Language Models

This is a 1 hour general-audience introduction to Large Language Models: the core technical component behind systems like ChatGPT, Claude, and Bard. What they are, where they are headed, comparisons and analogies to present-day operating systems, and some of the security-related challenges of this new computing paradigm.

As of November 2023 (this field moves fast!).

Context: This video is based on the slides of a talk I gave recently at the AI Security Summit. The talk was not recorded, but a lot of people came to me afterwards and told me they liked it. Seeing as I had already put in one long weekend of work to make the slides, I decided to just tune them a bit, record this round 2 of the talk and upload it here on YouTube. Pardon the random background, that's my hotel room during the Thanksgiving break.

- Slides as PDF: https://drive.google.com/file/d/1pxx_ZI7O-Nwl7ZLNk5hI3WzAsTLwvNU7/view?usp=share_link (42MB)

- Slides as Keynote: https://drive.google.com/file/d/1FPUpFMiCkMRKPFjhi9MAhby68MHVqe8u/view?usp=share_link (140MB)

A few things I wish I had said (I'll add items here as they come up):

- The dreams and hallucinations do not get fixed with finetuning. Finetuning just directs the dreams into helpful assistant dreams. Always be careful with what LLMs tell you, especially if they are telling you something from memory alone. That said, similar to a human, if the LLM used browsing or retrieval and the answer made its way into the working memory of its context window, you can trust the LLM a bit more to process that information into the final answer. But TL;DR: right now, do not trust what LLMs say or do. For example, in the tools section, I'd always recommend double-checking the math/code the LLM did.

- How does the LLM use a tool like the browser? It emits special words, e.g. |BROWSER|. When the code running inference on the LLM detects these words, it captures the output that follows, sends it off to a tool, comes back with the result, and continues the generation. How does the LLM know to emit these special words? Finetuning datasets teach it how and when to browse, by example. And/or the instructions for tool use can also be automatically placed in the context window (in the “system message”).

- You might also enjoy my 2015 blog post The Unreasonable Effectiveness of Recurrent Neural Networks. The way we obtain base models today is pretty much identical at a high level, except the RNN is swapped for a Transformer. http://karpathy.github.io/2015/05/21/rnn-effectiveness/

- What is in the run.c file? A slightly more full-featured 1000-line version is here: https://github.com/karpathy/llama2.c/blob/master/run.c

Chapters:

Part 1: LLMs

00:00:00 Intro: Large Language Model (LLM) talk

00:00:20 LLM Inference

00:04:17 LLM Training

00:08:58 LLM dreams

00:11:22 How do they work?

00:14:14 Finetuning into an Assistant

00:17:52 Summary so far

00:21:05 Appendix: Comparisons, Labeling docs, RLHF, Synthetic data, Leaderboard

Part 2: Future of LLMs

00:25:43 LLM Scaling Laws

00:27:43 Tool Use (Browser, Calculator, Interpreter, DALL-E)

00:33:32 Multimodality (Vision, Audio)

00:35:00 Thinking, System 1/2

00:38:02 Self-improvement, LLM AlphaGo

00:40:45 LLM Customization, GPTs store

00:42:15 LLM OS

Part 3: LLM Security

00:45:43 LLM Security Intro

00:46:14 Jailbreaks

00:51:30 Prompt Injection

00:56:23 Data poisoning

00:58:37 LLM Security conclusions

End

00:59:23 Outro](https://i.ytimg.com/vi/zjkBMFhNj_g/hqdefault.jpg)
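The tool-use note (the |BROWSER| example) describes a loop that runs around the model. It watches the output for a special word, hands what follows to a tool, pastes the result back, and lets generation continue. Here is a toy pure-Python sketch of that control flow. The `|CALC|` and `|/CALC|` markers, the `calculator` tool, and the scripted `fake_model` are all hypothetical stand-ins. A real system samples tokens from an LLM, and finetuning teaches it when to emit the markers.

```python
import operator

TOOL_START = "|CALC|"   # hypothetical special word that opens a tool call
TOOL_END = "|/CALC|"    # hypothetical special word that closes it

def calculator(expression):
    """Hypothetical tool: evaluates 'a*b' or 'a+b' on integers, nothing else."""
    for symbol, fn in (("*", operator.mul), ("+", operator.add)):
        if symbol in expression:
            a, b = expression.split(symbol, 1)
            return str(fn(int(a), int(b)))
    raise ValueError(f"unsupported expression: {expression!r}")

def run_with_tools(generate_next, prompt, max_steps=20):
    """Generation loop that intercepts tool calls.

    generate_next(context) stands in for the LLM: it returns the next chunk
    of text, or None when it is finished.
    """
    context = prompt
    for _ in range(max_steps):
        chunk = generate_next(context)
        if chunk is None:
            break
        context += chunk
        if context.endswith(TOOL_END):
            # capture the text between the special words and send it to the tool
            start = context.rfind(TOOL_START) + len(TOOL_START)
            call = context[start:-len(TOOL_END)]
            # paste the result into the context window and keep generating
            context += "=" + calculator(call)
    return context

def fake_model(context):
    """Scripted stand-in for an LLM (hypothetical): one tool call, then an answer."""
    if TOOL_START not in context:
        return " Let me compute. " + TOOL_START + "12*34" + TOOL_END
    if context.endswith("."):
        return None
    result = context.rsplit("=", 1)[1]  # read the tool output back from the context
    return " So the answer is " + result + "."

print(run_with_tools(fake_model, "Q: what is 12*34?"))
```

This sketch checks for the closing marker only at chunk boundaries. A real inference loop would check after every sampled token.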